K8s API Core Objects: client-go


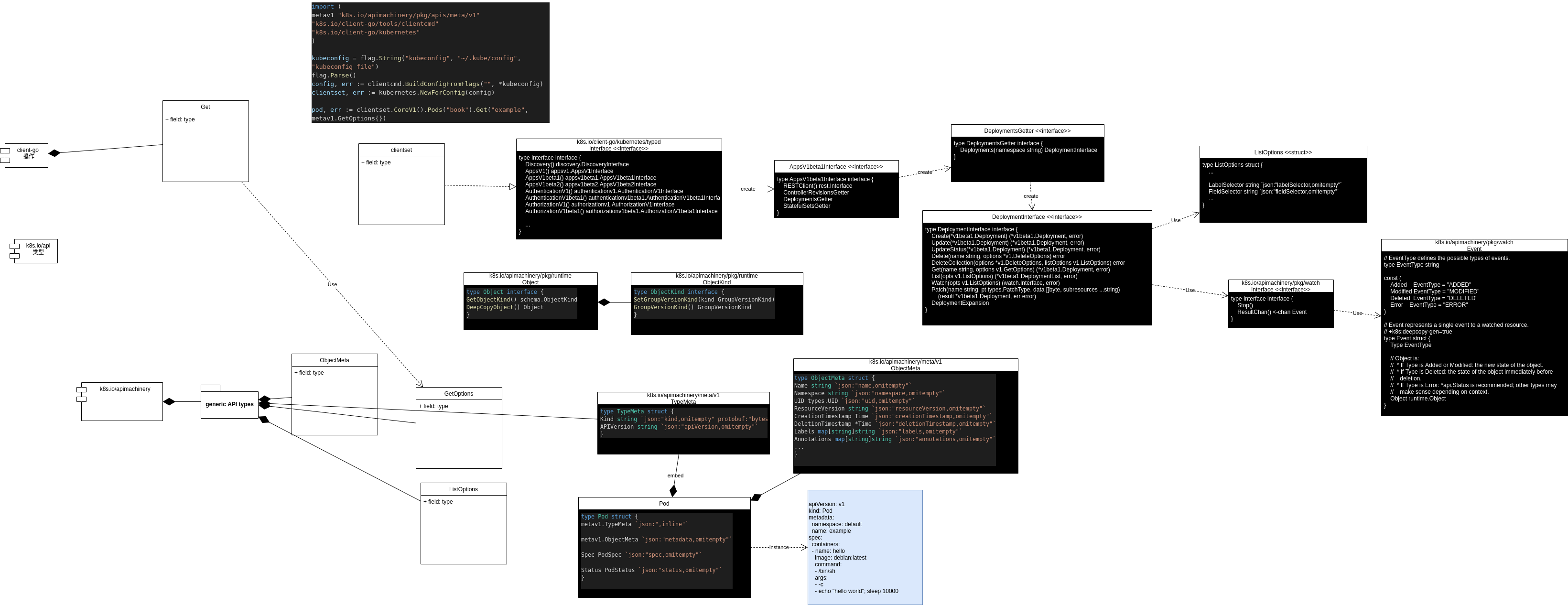

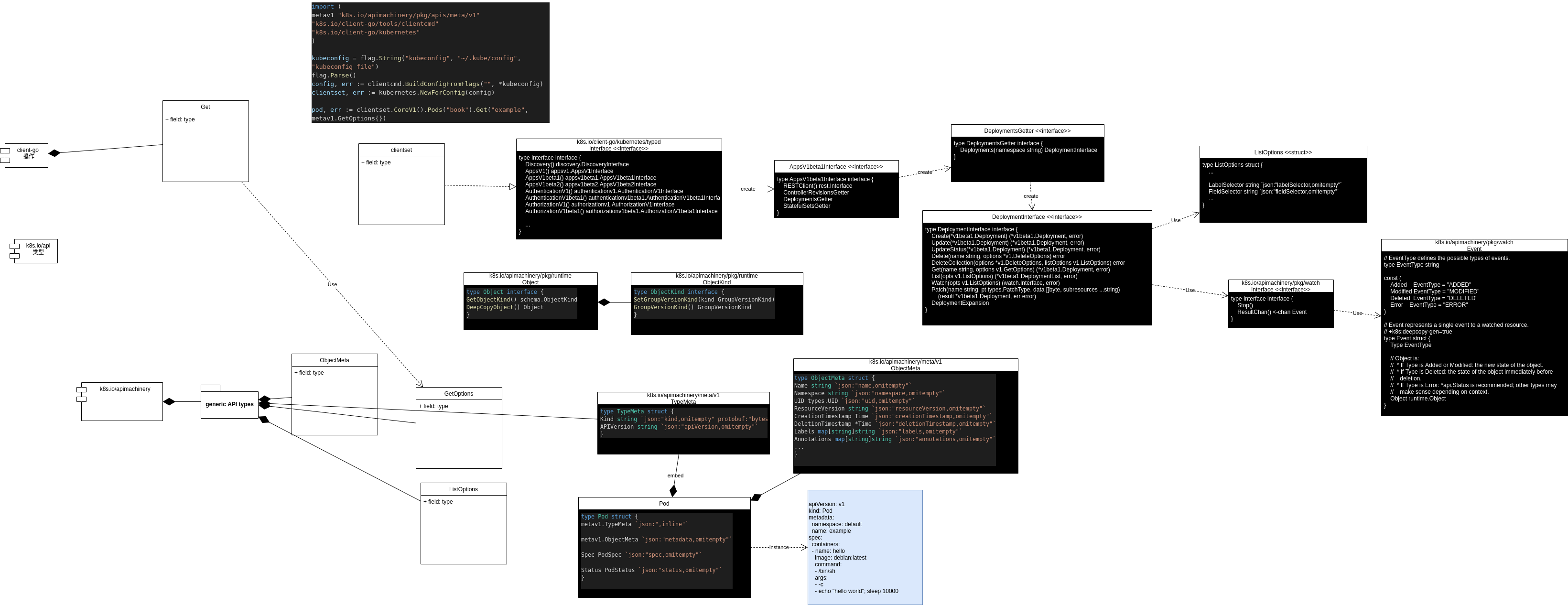

API Entry Points

Client Sets

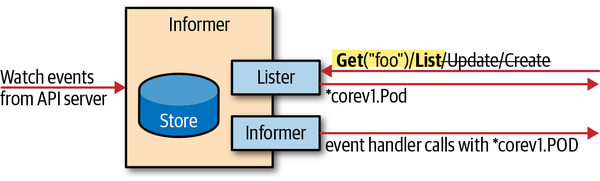

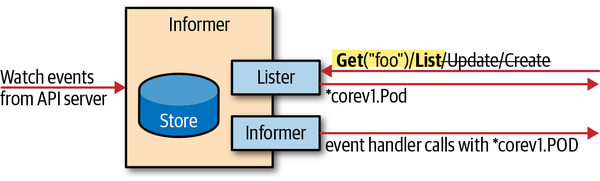

Receiving Change Notifications and Caching (Informers and Caching)

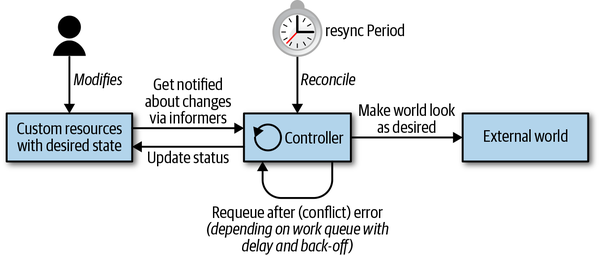

Client Sets can watch for changes, but in practice we usually use the higher-level Informers, because they add caching, indexing, and related features.

- Lister: called by the application; returns lists of objects from the cache

- Informer: the listener that receives change notifications
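The division of labour between the two can be sketched with the Go standard library alone. This is not client-go's API: `Event`, `Store`, `RunInformer` and the `"namespace/name"` keys are hypothetical names chosen for illustration, and the object is reduced to a plain string. The sketch only shows the idea that the informer side consumes change events and keeps a local cache current, while the lister side answers reads from that cache without contacting the API server.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// EventType names the kinds of change a watch can report (illustrative).
type EventType string

const (
	Added    EventType = "ADDED"
	Modified EventType = "MODIFIED"
	Deleted  EventType = "DELETED"
)

// Event is a hypothetical, simplified change notification.
type Event struct {
	Type EventType
	Key  string // assumed "namespace/name" key
	Obj  string // stand-in for the real object
}

// Store is the local cache shared by the informer (writer) and the lister (reader).
type Store struct {
	mu    sync.RWMutex
	items map[string]string
}

func NewStore() *Store { return &Store{items: map[string]string{}} }

// Apply updates the cache according to one event.
func (s *Store) Apply(e Event) {
	s.mu.Lock()
	defer s.mu.Unlock()
	if e.Type == Deleted {
		delete(s.items, e.Key)
		return
	}
	s.items[e.Key] = e.Obj
}

// List is the lister side: it returns the cached keys in sorted order.
func (s *Store) List() []string {
	s.mu.RLock()
	defer s.mu.RUnlock()
	keys := make([]string, 0, len(s.items))
	for k := range s.items {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	return keys
}

// RunInformer drains events, updates the cache, then notifies the handler.
// It returns when the events channel is closed.
func RunInformer(events <-chan Event, s *Store, handler func(Event)) {
	for e := range events {
		s.Apply(e)
		if handler != nil {
			handler(e)
		}
	}
}

func main() {
	events := make(chan Event, 3)
	events <- Event{Added, "default/web", "v1"}
	events <- Event{Added, "default/db", "v1"}
	events <- Event{Deleted, "default/web", ""}
	close(events)

	store := NewStore()
	RunInformer(events, store, func(e Event) { fmt.Println(e.Type, e.Key) })
	fmt.Println(store.List())
}
```

In real client-go code the cache, the event handlers and the lister come from the library's informer machinery; the sketch leaves out resync, indexing and error handling.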

An Informer has two functions


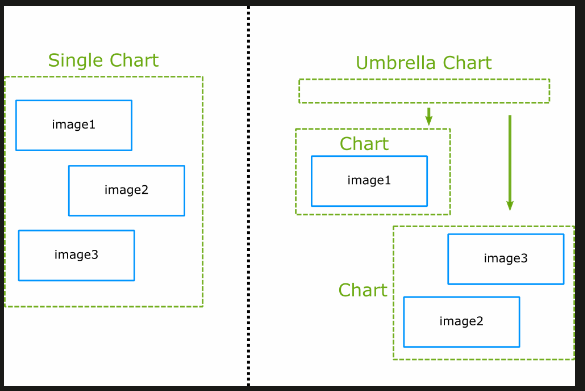

You can also create a chart that depends on other, completely external charts (a.k.a. an umbrella chart) by declaring them in the requirements.yaml file.

https://codefresh.io/docs/docs/new-helm/helm-best-practices/

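Recently, for work, I have been systematically revisiting Kubernetes programming. I found that the book "Programming Kubernetes" covers the topic quite systematically, so I am writing down the key points as I learn.

Status of this article: draft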


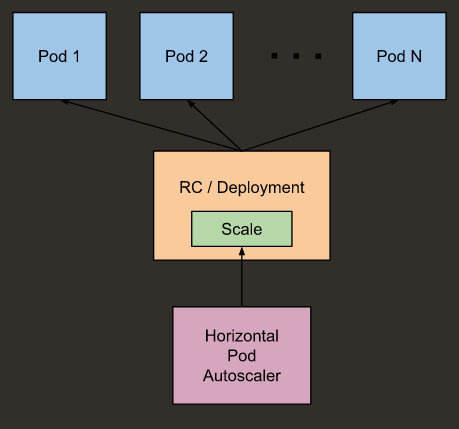

desiredReplicas = ceil[currentReplicas * ( currentMetricValue / desiredMetricValue )]

currentMetricValue is the average of the metric across the relevant pods.
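As a worked example of the formula, here is a minimal Go sketch. The function name `DesiredReplicas` and the sample numbers are illustrative assumptions; the real autoscaler applies further rules (for example a tolerance band and minimum/maximum replica bounds) that are left out here, and the sketch assumes `desiredMetricValue` is non-zero.

```go
package main

import (
	"fmt"
	"math"
)

// DesiredReplicas applies
//   desiredReplicas = ceil(currentReplicas * (currentMetricValue / desiredMetricValue)).
// currentMetricValue is the metric averaged over the relevant pods.
func DesiredReplicas(currentReplicas int, currentMetricValue, desiredMetricValue float64) int {
	ratio := currentMetricValue / desiredMetricValue
	return int(math.Ceil(float64(currentReplicas) * ratio))
}

func main() {
	// 4 pods averaging 200 against a target of 100: scale up.
	fmt.Println(DesiredReplicas(4, 200, 100)) // 8
	// 4 pods averaging 50 against a target of 100: scale down.
	fmt.Println(DesiredReplicas(4, 50, 100)) // 2
	// 3 pods averaging 90 against a target of 60: 4.5 rounds up.
	fmt.Println(DesiredReplicas(3, 90, 60)) // 5
}
```

Because of the ceiling, any load above the target rounds up to a whole extra pod rather than leaving the workload under-provisioned.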

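Now that shared memory and multi-process/multi-thread runtime designs are mainstream, have you ever wondered how processes and threads actually synchronize with each other? Most people know that the programming languages and libraries we use already provide nice-looking wrappers. Behind that polished facade, however, most of them ultimately rely on the kernel and/or the CPU hardware to do the synchronization. Conversely, once you understand the internals, you can quickly understand those wrappers and their likely performance problems, and then pick the one that suits you. Those internals are the synchronization primitives.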

VirtualService:


DestinationRule:


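With a sourceLabels rule, traffic can be routed based on the labels of the source pod. Here the version label is used, so routing depends on the application version of the calling pod.

A routing rule like this is actually applied in the sidecar of the calling side.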


Then, in another terminal:


The bpftrace terminal then shows the following output:

From [Understanding The Linux Kernel]

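As we saw earlier, Linux tends to use as much memory as possible for the page cache. This forces us to think about how to reclaim memory before it runs out. The catch is that the code reclaiming memory may itself perform IO, and may itself need memory.

Every page in the page cache belongs to a file. That file, or more precisely the file's inode, is called the owner of the page.

The core data structure of the page cache is address_space. In general, each inode (the in-memory kernel data structure that holds a file's metadata; it can be seen as a description of the file) contains one address_space.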

https://tenzir.com/blog/production-debugging-bpftrace-uprobes/

https://shaharmike.com/cpp/vtable-part1/


$ # compile our code with debug symbols and start debugging using gdb

$ clang++ -std=c++14 -stdlib=libc++ -g main.cpp && gdb ./a.out

...

(gdb) # ask gdb to automatically demangle C++ symbols

(gdb) set print asm-demangle on

(gdb) set print demangle on

(gdb) # set breakpoint at main

(gdb) b main

Breakpoint 1 at 0x4009ac: file main.cpp, line 15.

(gdb) run

Starting program: /home/shmike/cpp/a.out

Breakpoint 1, main () at main.cpp:15

15 Parent p1, p2;

(gdb) # skip to next line

(gdb) n

16 Derived d1, d2;

(gdb) # skip to next line

(gdb) n

18 std::cout << "done" << std::endl;

(gdb) # print p1, p2, d1, d2 - we'll talk about what the output means soon

(gdb) p p1

$1 = {_vptr$Parent = 0x400bb8 <vtable for Parent+16>}

(gdb) p p2

$2 = {_vptr$Parent = 0x400bb8 <vtable for Parent+16>}

(gdb) p d1

$3 = {<Parent> = {_vptr$Parent = 0x400b50 <vtable for Derived+16>}, <No data fields>}

(gdb) p d2

$4 = {<Parent> = {_vptr$Parent = 0x400b50 <vtable for Derived+16>}, <No data fields>}

Here’s what we learned from the above: