Figure: agentgateway proxies the outbound connections of an Agent, including the MCP server, AI Agent, and OpenAPI

(source: https://agentgateway.dev/docs/about/architecture/)

Overview

This article analyzes the source code of agentgateway to provide a preliminary understanding of its main initialization process. It aims to offer a high-level reference and guidance for readers interested in diving deeper into the implementation of agentgateway.

As my understanding of Rust, especially the asynchronous programming style of tokio, is limited, please point out any errors or omissions.

Introduction

A person who practices critical thinking must first answer the question: why do we need another proxy? Aren’t Nginx/HAProxy/Envoy Gateway… enough?

The reason a new gateway is needed, instead of simply reusing existing API Gateways, Service Meshes, or traditional reverse proxies, is that AI agent systems have unique requirements that existing gateways cannot meet well:

- Protocol Differences: Interactions between AI agents often use new protocols like MCP and A2A, which involve long-lived connections, bidirectional communication, and asynchronous message streams, unlike the HTTP/REST or gRPC models primarily supported by traditional gateways.
- State and Session Management: Traditional gateways are mostly stateless forwarders, whereas agent interactions typically require maintaining long-term context, identity status, and session information.
- Security and Multi-tenancy: The agent ecosystem requires more fine-grained authentication, authorization, and isolation capabilities to ensure that tools can be shared securely and reliably among different users and agents.
- Observability and Governance: The call chains and event streams of AI agents are complex, demanding stronger capabilities for monitoring, metrics collection, tracing, and traffic governance, areas where traditional gateways are relatively weak.
- Tool and Service Virtualization: Agentgateway can unify, abstract, register, and expose disparate MCP tools or external APIs, making it convenient for agents to discover and invoke them dynamically.
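
To make the tool virtualization idea a little more concrete, here is a minimal sketch of a registry that exposes tools from several backends under one flat namespace. It is not taken from the agentgateway source: `ToolRegistry`, `ToolTarget`, and the `<backend>_<tool>` naming scheme are all hypothetical names chosen for illustration.

```rust
use std::collections::HashMap;

/// Hypothetical record describing where a tool actually lives.
#[derive(Debug, Clone, PartialEq)]
struct ToolTarget {
    backend: String,     // the upstream server that owns the tool
    remote_name: String, // the tool's name on that upstream server
}

/// Hypothetical registry: agents see one flat namespace of tools.
#[derive(Default)]
struct ToolRegistry {
    tools: HashMap<String, ToolTarget>,
}

impl ToolRegistry {
    /// Register a tool and return the name it is exposed under.
    /// The "<backend>_<tool>" scheme is an assumption of this sketch.
    fn register(&mut self, backend: &str, tool: &str) -> String {
        let exposed = format!("{backend}_{tool}");
        self.tools.insert(
            exposed.clone(),
            ToolTarget {
                backend: backend.to_string(),
                remote_name: tool.to_string(),
            },
        );
        exposed
    }

    /// Discovery: list every exposed tool name, sorted for stable output.
    fn list(&self) -> Vec<String> {
        let mut names: Vec<String> = self.tools.keys().cloned().collect();
        names.sort();
        names
    }

    /// Resolve an exposed name back to the backend that should receive the call.
    fn resolve(&self, exposed: &str) -> Option<&ToolTarget> {
        self.tools.get(exposed)
    }
}

fn main() {
    let mut registry = ToolRegistry::default();
    registry.register("weather", "get_forecast");
    registry.register("github", "create_issue");
    for name in registry.list() {
        println!("{name} -> {:?}", registry.resolve(&name));
    }
}
```

The agent only ever sees the exposed names. Which backend serves a call is decided by the registry, which is the part a gateway can take over.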

Existing gateways are not well-suited for AI agent scenarios in terms of protocol support, state management, security, and observability, thus necessitating a new generation of gateway specifically designed for agents.

The comparison table below lists the differences between a Traditional API Gateway and Agentgateway across several key capabilities:

| Capability/Feature | Traditional API Gateway | Agentgateway |
|---|---|---|
| Protocol Support | Primarily HTTP/REST, gRPC | Designed for agent protocols like MCP, A2A; supports bidirectional, asynchronous communication |
| Connection Model | Short-lived connections, request-response | Long-lived connections, bidirectional streams, stateful sessions |
| State Management | Stateless forwarding | Maintains agent sessions, context, and identity |
| Security Control | Authentication, Authorization (biased towards API users) | Fine-grained access control, multi-tenant isolation, supports tool-level authorization |
| Observability | Basic logs/metrics | Call chain tracing, event monitoring, and metrics collection specifically for agents |
| Traffic Governance | Rate limiting, circuit breaking, routing | Intelligent governance based on agent behavior (session-level/tool-level) |
| Tool Virtualization/Discovery | None | Supports MCP tool/service registration, abstraction, and dynamic discovery |
| Typical Scenarios | Traditional API management, client-facing | AI agent platforms, interconnection of tools/LLMs/agents |

As you can see, Agentgateway is not positioned to replace traditional API Gateways or Service Meshes, but rather to fill the gaps in protocol adaptation, session management, security, and governance for AI agent scenarios.

Agentgateway Introduction

Agentgateway is an open-source, cross-platform data plane designed specifically for AI agent systems. It establishes secure, scalable, and maintainable bidirectional connections between agents, MCP tool servers, and LLM providers. It addresses the shortcomings of traditional gateways in handling MCP/A2A protocols, such as state management, long sessions, asynchronous messaging, security, observability, and multi-tenancy. It provides enterprise-grade capabilities like unified access, protocol upgrades, tool virtualization, authentication and authorization, traffic governance, and metrics/tracing. It also supports the Kubernetes Gateway API, dynamic configuration updates, and an embedded developer self-service portal to help rapidly build and scale agent-based AI environments.

The above is an AI-generated introduction. Let me put it in plain language :) I believe that at its current stage, agentgateway is more like an outbound bus (an external dependency bus) for AI Agent applications.

Code Structure

It should be considered a typical Rust project. I’ve just recently picked up Rust again, so don’t hit me if I’m wrong. Cargo.toml lists all the dependencies. There are also a few .md documents. I won’t go into the standard README.md, but DEVELOPMENT.md is a good starting point for development operations.

I was a bit surprised when I read CODE_OF_CONDUCT.md.

Isn’t it interesting that an AI-oriented project prohibits the use of AI for certain key tasks?

This is a typical Rust project based on a Cargo Workspace, meaning it consists of multiple interrelated independent packages (called crates). Below is an explanation of the main modules and key locations:

Top-level Structure

- Cargo.toml: This is the root configuration file for the workspace. It defines which crates are included in the entire workspace and may define dependencies and configurations shared by all members.
- crates/: This is the core source code directory of the project. All the Rust logic is stored here, and each subdirectory is an independent crate.
- ui/: This is a frontend project. Judging from the next.config.ts and package.json files, it is a web user interface built with Next.js (React). It is separate from the backend Rust code.
- examples/: Contains various usage examples. Each subdirectory (e.g., basic, tls, openapi) demonstrates a specific feature or configuration of agentgateway, which is very helpful for understanding how to use this project.
- schema/: Stores the data structure definitions (Schema) for configuration files. local.json and cel.json define the format and validation rules for the configuration files.
- go/: Contains some Go language source code. Judging by the *.pb.go filenames, this is auto-generated code from Protocol Buffers (proto) definitions, used for inter-language (Rust/Go) service communication.
- architecture/: Contains project architecture design documents.

Important Modules (Crates) in the crates/ Directory

This is the core of the Rust code, where each crate has a clear responsibility:

- agentgateway: Core Proxy Logic Crate.
  - Location: crates/agentgateway/
  - Description: This is the project’s main library, implementing all core functionalities such as the Proxy, routing, middleware, etc.
- agentgateway-app: Application Entry Point Crate.
  - Location: crates/agentgateway-app/
  - Description: This is a binary crate. Its main role is to reference the agentgateway library crate and build the final executable. The program’s main function should be here. This is a common “library + application” separation pattern in Rust projects.
- core: Core Types and Utilities Crate.
  - Location: crates/core/
  - Description: Typically used to store basic data structures, traits, constants, and utility functions shared by multiple other crates in the workspace.
- xds: xDS API Implementation Crate.
  - Location: crates/xds/
  - Description: “xDS” is a set of discovery service APIs widely used in the cloud-native space (especially in the Envoy proxy). This crate likely implements an xDS client for dynamically fetching configurations (like routes, service discovery, etc.) from a control plane. The proto subdirectory and build.rs file confirm that it needs to compile Protobuf files.
- hbone: HBONE Protocol Crate.
  - Location: crates/hbone/
  - Description: HBONE (HTTP-Based Overlay Network Encapsulation) is a tunneling protocol used in Istio for zero-trust networking. This crate is specifically responsible for handling HBONE connections and communication.
- a2a-sdk: Agent-to-Agent SDK Crate.
  - Location: crates/a2a-sdk/
  - Description: Agent-to-Agent SDK.
- mock-server: Mock Server for Testing.
  - Location: crates/mock-server/
  - Description: Used to simulate backend HTTP services in integration or end-to-end tests.
- xtask: Build and Task Automation Crate.
  - Location: crates/xtask/
  - Description: This is a popular pattern for writing project automation scripts (like build, test, deploy) in Rust, as an alternative to Makefile or shell scripts.

Summary

This project is a feature-rich service gateway whose architecture clearly separates different concerns:

- Core business logic is in crates/agentgateway.
- The program entry point is in crates/agentgateway-app.
- Underlying network protocols (like HBONE, xDS) are abstracted into separate crates (hbone, xds).
- The user interface (ui/) and the backend (crates/) are completely decoupled.
- Examples (examples/) provide a rich set of use cases and are a good starting point for learning and usage.

Development Environment

I use a VS Code dev container, since the repository includes a .devcontainer.json. Running the development environment in a container keeps every setup consistent, which avoids many dependency, toolchain, and version problems and reduces communication overhead.

To help read the source code, especially Rust code written in the tokio asynchronous style, I installed an AI assistant in VS Code: Gemini Code Assist.

Debug agentgateway

VS Code generates a launch.json for the debug session.

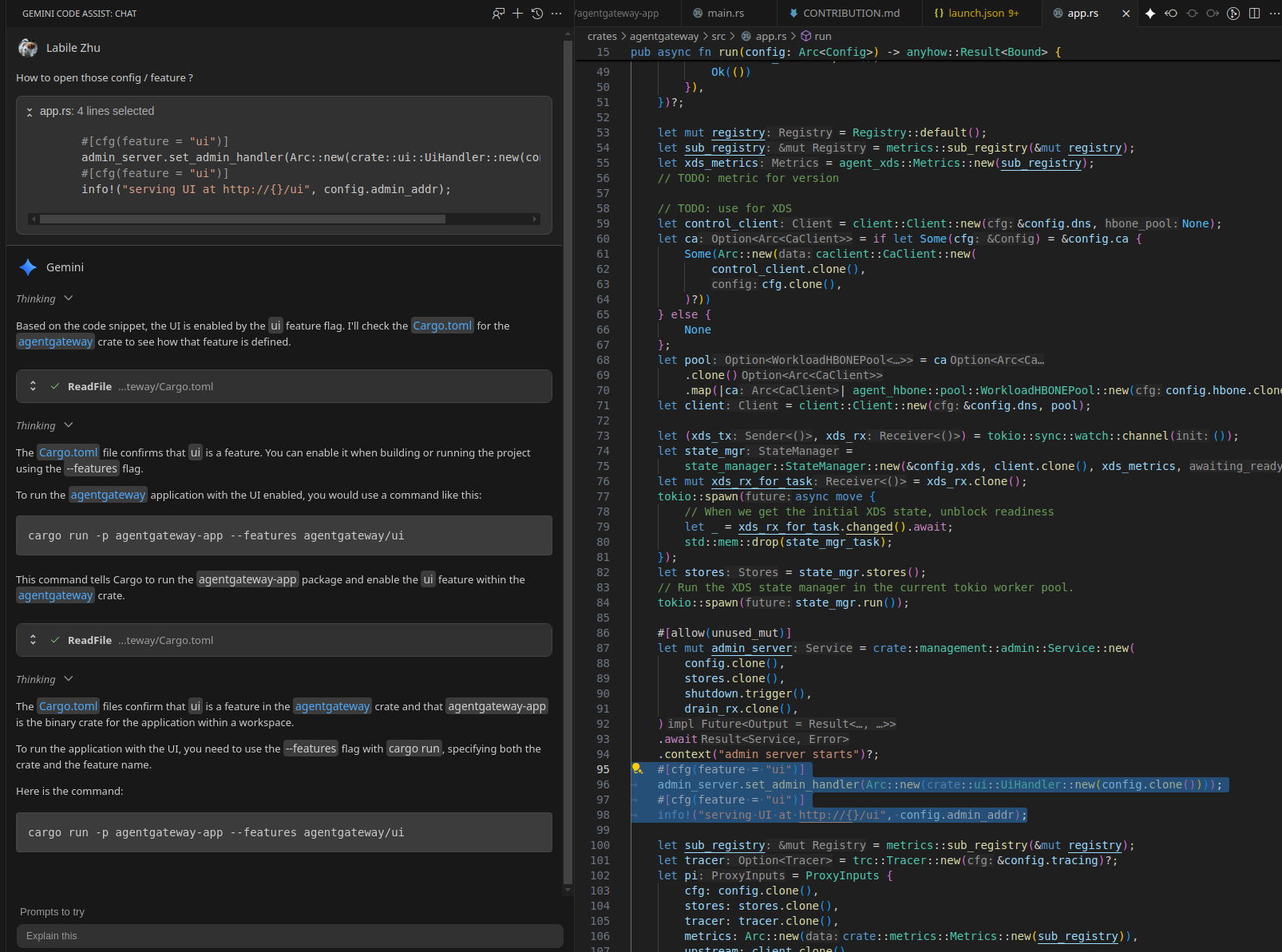

It’s worth noting that I added --features to the cargo configuration. A Cargo feature is roughly equivalent to a preprocessor macro switch in a C++ project: code gated behind a feature is only compiled when that feature is enabled. You must enable the agentgateway/ui feature to get the UI functionality.
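
As a minimal sketch of how a Cargo feature gates code in general (this is not the agentgateway source; the feature is simply called `ui` here and `ui_status` is a hypothetical function), conditional compilation with `#[cfg(feature = "...")]` looks roughly like this:

```rust
/// Compiled only when the crate is built with the `ui` feature enabled,
/// for example via `cargo build --features ui`.
#[cfg(feature = "ui")]
fn ui_status() -> &'static str {
    "ui enabled"
}

/// Fallback that is compiled when the `ui` feature is off.
#[cfg(not(feature = "ui"))]
fn ui_status() -> &'static str {
    "ui disabled"
}

fn main() {
    // `cfg!` evaluates the same condition to a compile-time boolean.
    println!(
        "{} (cfg!(feature = \"ui\") = {})",
        ui_status(),
        cfg!(feature = "ui")
    );
}
```

Exactly one of the two `ui_status` definitions exists in any given build, which is why the analogy with `#ifdef` in C++ holds. In a real crate the feature must also be declared in the `[features]` table of Cargo.toml.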

BTW, you need to install npm to build the UI.

Implementation Breakdown

If you suspect the content above was AI-generated, then what follows is the human-written core content of this article.

Initialization Process

Next, let’s talk about the initialization process and the threading model. There are three main types of threads:

- main thread, thread name: agentgateway
- main spawn thread, thread name is also agentgateway
- agentgateway workers, thread name format: agentgateway-N

If the diagram has formatting issues, open the original in Draw.io.

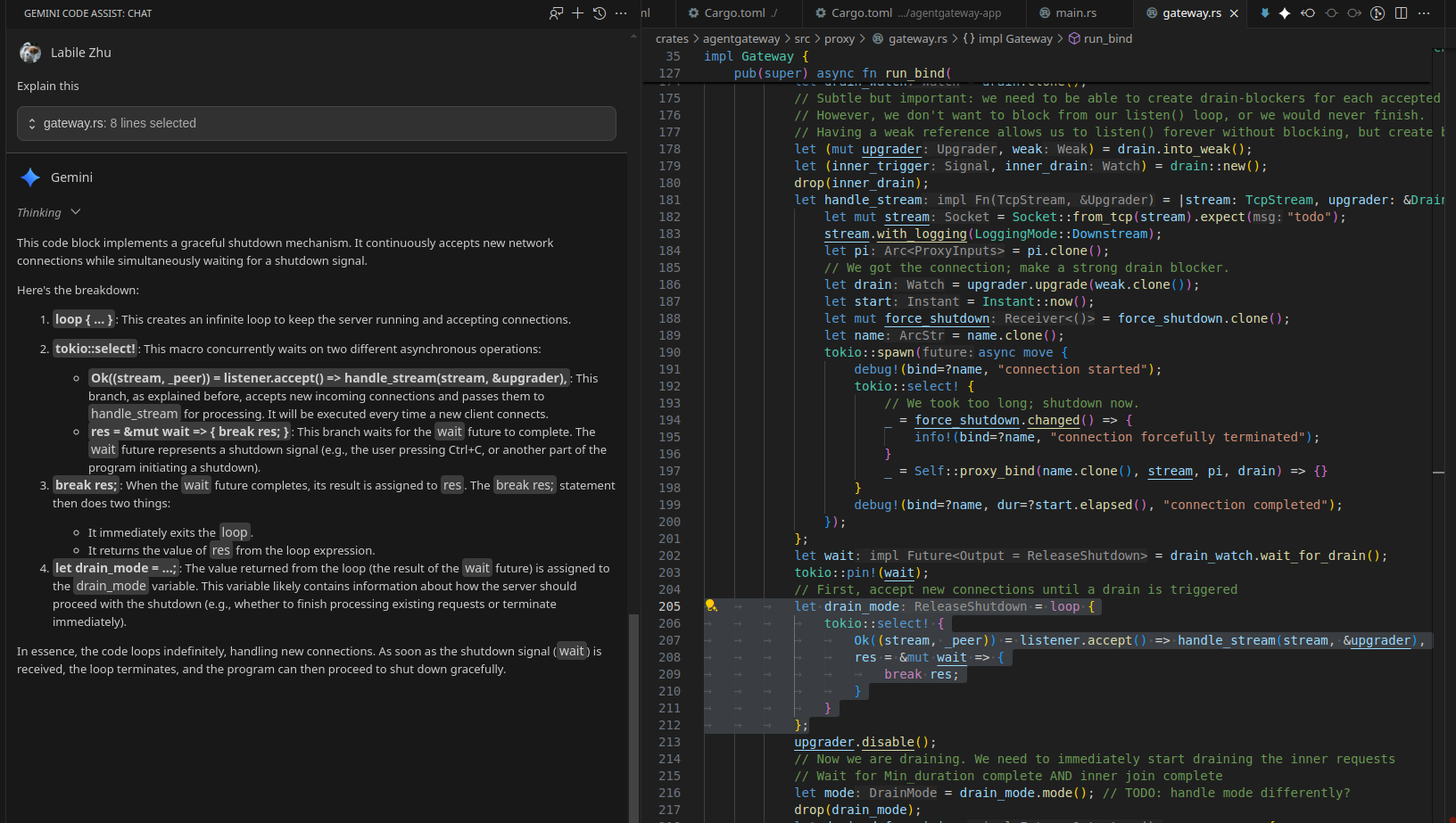

Browsing code written in this Rust + tokio asynchronous style can be a bit brain-draining for a Rust novice. Fortunately, I have experience with Go’s Goroutines, so I can roughly see the pattern. Each thread can bind a tokio::runtime::Runtime (equivalent to a scheduler with a thread pool) in its context. Pay attention to all “spawn”-related code.
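
The thread naming pattern can be sketched with the standard library alone. This is only an illustration under stated assumptions: it uses `std::thread` instead of tokio so that it runs without dependencies, `spawn_named_workers` is a hypothetical helper, and numbering the workers from 0 is my assumption rather than something confirmed from the agentgateway source.

```rust
use std::thread;

/// Spawn `n` named worker threads and return the names they report.
/// Illustrative only: plain OS threads stand in for the tokio workers.
fn spawn_named_workers(prefix: &str, n: usize) -> Vec<String> {
    let handles: Vec<thread::JoinHandle<String>> = (0..n)
        .map(|i| {
            thread::Builder::new()
                // Name pattern "<prefix>-N"; starting at 0 is an assumption.
                .name(format!("{prefix}-{i}"))
                .spawn(|| {
                    // Each worker reports the name it was given.
                    thread::current().name().unwrap_or("unnamed").to_string()
                })
                .expect("failed to spawn worker thread")
        })
        .collect();

    // Join in spawn order so the result order is deterministic.
    handles
        .into_iter()
        .map(|h| h.join().expect("worker thread panicked"))
        .collect()
}

fn main() {
    for name in spawn_named_workers("agentgateway", 3) {
        println!("{name}");
    }
}
```

Naming threads this way is what makes them distinguishable in a debugger or in `top -H`, which helps when mapping the three thread types above to what you see at runtime.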

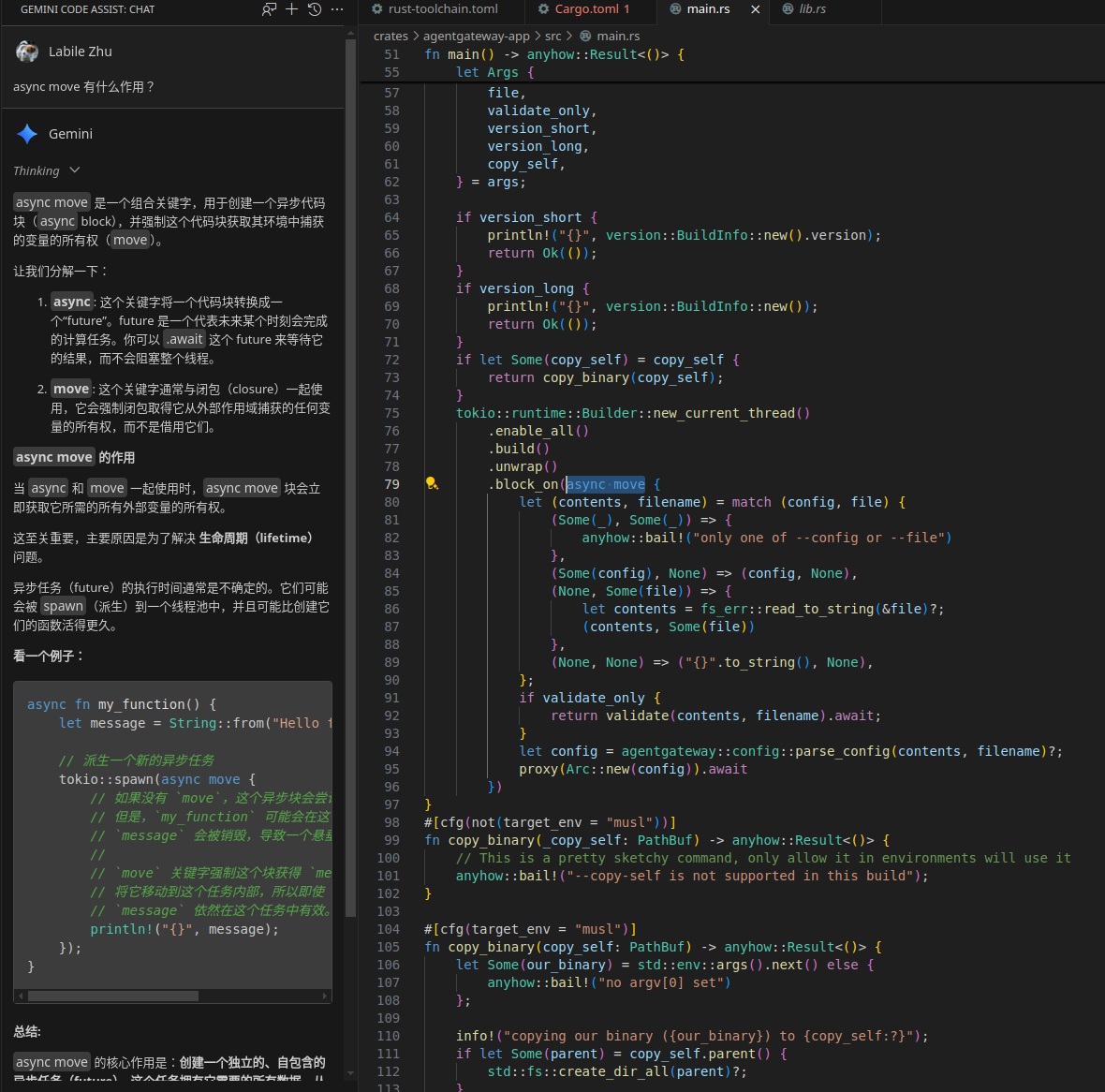

The AI Magic of Reading Code

Code in this Rust + tokio asynchronous style is not very friendly to newcomers. I installed an AI assistant in vscode: Gemini Code Assist. This allowed me to start reading the project’s code without first reading a thick Rust book. This method of learning a programming language by directly reading open-source code is also much more interesting than the traditional step-by-step approach (though it can feel less solid at times). If you think “Vibe Coding” sounds a bit absurd, then “Vibe Code Reading” is much more reliable. Here are some examples:

The difference from a search engine is that it answers questions directly within the context of your work, without you needing to manually extract and refine the information. Perhaps in the age of AI, knowing how to ask good questions has become even more important.